How do you turn your (not so) ordinary Raspberry Pi into an Amazon Echo-like device that communicates with its surroundings through Amazon Alexa? How do you ask her about the weather in London, remotely turn off the lights at home, or make an appointment with a dentist? How can you extend Alexa with practically any functionality in a few steps? How do you check whether your audience is happy, sad or, God forbid, terrified? This article answers these and other questions. The material was presented at the October DevForge conference in Białystok, as part of the lecture “Tell me more – we build our own AWS Alexa on Raspberry Pi”.

A few words about Amazon Echo

Amazon Echo is a device that provides a voice assistant (made available through Amazon Alexa), useful in daily work, entertainment and typical home activities such as music playback, smart home control, arranging meetings, checking the weather forecast and much more.

Would you like to have such a device at home? It is possible even without buying a brand new, shiny Amazon product. All you need is a Raspberry Pi (or, in fact, an ordinary PC) and a bit of other equipment.

Building our own Alexa on Raspberry Pi, step by step:

1. Creating a developer account on Amazon

To get the ball rolling, we need to set up an account at developer.amazon.com and register our device. The entire process is described here: https://github.com/alexa/avs-device-sdk/wiki/Create-Security-Profile

Once all the steps are completed, the new device should be displayed as registered.

2. Raspberry Pi configuration

The first step is to turn our Raspberry Pi into a so-called “Alexa enabled device”, or simply a device that can talk to the Alexa service and, with the help of additional peripherals (a microphone and speakers), interact with its surroundings. At this point we assume that you have already set up an OS on your Raspberry Pi, for example Raspbian. If you have not done so yet, check out https://github.com/alexa/alexa-avs-sample-app/wiki/Setting-up-the-Raspberry-Pi.

Next, we have to install the AVS Device SDK on our Raspberry Pi, which we will do by following a simple tutorial from the official wiki of the project: https://github.com/alexa/avs-device-sdk/wiki

On the above-mentioned GitHub page you will find guides for Raspberry Pi, Ubuntu, macOS, Windows, iOS and Android. There is a lot to choose from, but we will focus only on the Raspberry Pi version, which is available here: https://github.com/alexa/avs-device-sdk/wiki/Raspberry-Pi-Quick-Start-Guide-with-Script

After following the steps from the guide, your equipment will be ready for some demo interactions with the Alexa Voice Service. Try the provided Sample App and ask, for example, what the weather is like right now, or see what happens when you say “Alexa, tell me a joke”.

3. Let’s build something more!

After getting to know and playing with the Alexa Voice Service, it is high time for a slightly more ambitious project. Whether you are giving a lecture, talking with friends or browsing photos from a concert, wouldn’t it be exciting to know what mood your listeners are in? Of course it would! Let’s try to design an application (a so-called “Alexa Skill”, an ability that extends the set of default interactions prepared by Amazon, and not only for AVS) which will automatically take a picture with a camera connected to the Raspberry Pi and examine the mood of the audience.

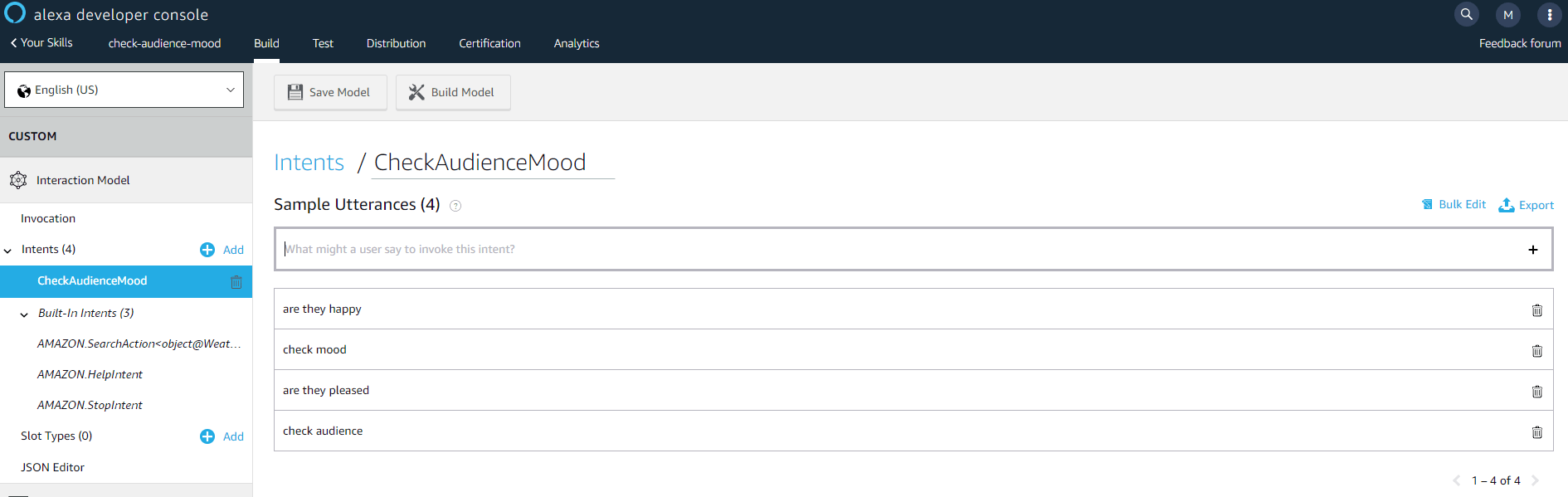

Before we start building the complete architecture, let’s shape the Alexa Skill so that it can react to our command (for example by triggering the serverless module offered by AWS, known as AWS Lambda). For this purpose, we need to log in again at developer.amazon.com and create a new, simple Skill which will react to a few phrases (like asking for the mood of the audience), after which Lambda will start to process the photo and publish the results. A comprehensive description of how to create a Skill and connect it with Lambda can be found here: https://developer.amazon.com/docs/custom-skills/host-a-custom-skill-as-an-aws-lambda-function.html (not to mention dozens of YouTube videos in which the presenters implement their own Skills live in ten-odd minutes). Ultimately, we want our Skill, let’s call it “Check Audience Mood”, to react to a handful of phrases (in Alexa terms, sample utterances mapped to an Intent).

Our Skill will react when we say any of the following phrases to Alexa:

- are they happy

- check mood

- are they pleased

- check audience
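To make the phrases above a bit more tangible, here is a minimal sketch of how they could be expressed as an interaction model, the JSON document that the Alexa developer console accepts in its JSON editor. The invocation name and the four phrases come from this article; the intent name `CheckMoodIntent` is purely a placeholder of mine, and the list of built-in intents is an assumption about what a custom Skill typically needs.

```python
import json

# The invocation name and sample phrases come from the article.
# "CheckMoodIntent" is a made-up placeholder for the intent name.
INVOCATION_NAME = "check audience mood"
SAMPLE_UTTERANCES = [
    "are they happy",
    "check mood",
    "are they pleased",
    "check audience",
]


def build_interaction_model(invocation_name, intent_name, samples):
    """Return a dict shaped like the interaction model JSON of a custom Skill."""
    return {
        "interactionModel": {
            "languageModel": {
                "invocationName": invocation_name,
                "intents": [
                    {"name": intent_name, "slots": [], "samples": list(samples)},
                    # Built-in intents that custom Skills usually include (assumption).
                    {"name": "AMAZON.HelpIntent", "samples": []},
                    {"name": "AMAZON.StopIntent", "samples": []},
                    {"name": "AMAZON.CancelIntent", "samples": []},
                ],
            }
        }
    }


model = build_interaction_model(INVOCATION_NAME, "CheckMoodIntent", SAMPLE_UTTERANCES)
print(json.dumps(model, indent=2))
```

The printed JSON can be pasted into the console and adjusted from there; building it in code simply keeps the list of phrases in one place.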

I recommend a bit of “sandbox fun” with Intents and Skills here. Amazon provides comprehensive documentation and lets you test voice interactions without installing anything on physical devices.
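On the Lambda side, the Skill delivers a JSON request and expects a JSON response with the text Alexa should speak. Below is a minimal sketch of such a handler in Python 3, not the exact code used in the talk. The intent name `CheckMoodIntent`, the `CAPTURE_QUEUE_URL` environment variable and the message body are my own placeholders. What the handler does after recognizing the intent is up to you; here it drops a message on an SQS queue, which is how the architecture described below connects the Skill to the Raspberry Pi.

```python
import json
import os

# Hypothetical names: neither the intent name nor the queue URL appears in the article.
INTENT_NAME = "CheckMoodIntent"
QUEUE_URL = os.environ.get("CAPTURE_QUEUE_URL", "")


def speak(text):
    """Wrap plain text in the response envelope Alexa expects from a custom Skill."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


def enqueue_capture_request(queue_url):
    """Ask the Raspberry Pi for a photo by placing a message on SQS."""
    import boto3  # imported lazily so the rest of the module works without AWS access

    sqs = boto3.client("sqs")
    sqs.send_message(QueueUrl=queue_url, MessageBody=json.dumps({"action": "capture"}))


def lambda_handler(event, context, enqueue=enqueue_capture_request):
    """Entry point: react to the mood intent, answer politely to everything else."""
    request = event.get("request", {})
    if request.get("type") == "LaunchRequest":
        return speak("Ask me whether the audience is happy.")
    if request.get("type") == "IntentRequest":
        if request.get("intent", {}).get("name") == INTENT_NAME:
            enqueue(QUEUE_URL)
            return speak("Checking the audience mood now.")
    return speak("Sorry, I did not understand that.")
```

The `enqueue` parameter exists only so the handler can be exercised locally with a stand-in function; Lambda itself calls it with just `event` and `context`.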

Returning to our idea of checking an audience’s mood – the example project could look like this:

Let’s take a look at the components of this solution:

1. First, we have to get Alexa to use our Skill by invoking its Intent with a voice command, e.g. “Alexa, ask Check Audience Mood, are they happy?” (Be patient: Alexa sometimes has trouble with similar-sounding words and will not always recognize the command correctly the first time. In addition, the Sample App can occasionally throw a NullPointerException, so you may have to try again 🙂)

2. Once the command is recognized, the Skill invokes a Lambda function, which puts a message on an SQS queue.

3. A camera attached to the Raspberry Pi takes pictures, driven by a script that waits for a message on the SQS queue (of course, this can be done in other ways, e.g. by opening a tunnel with ngrok and “talking” to a microservice). In my case the photo is taken with the fswebcam tool, using the following line:

fswebcam -r 1280x720 --no-banner /home/pi/webcam/$DATE.jpg

Of course, DATE is a variable generated in the script to give each photo a unique name. The photo is then uploaded to an S3 bucket (roughly speaking, file hosting on AWS).

4. The dedicated S3 bucket has a trigger configured, so every photo object that lands in it invokes a Lambda function. This Lambda holds most of the code needed to recognize human emotions and publish the results. In brief:
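For readers who prefer to keep the whole Raspberry Pi side in one language, the wait-for-message, take-photo, upload sequence could be sketched in Python 3 with boto3 roughly as follows. This is an illustration under assumptions rather than the script from the talk: the queue URL and bucket name are parameters you would supply yourself, and the timestamp format standing in for `$DATE` is my own choice.

```python
import subprocess
import time
from pathlib import Path

PHOTO_DIR = Path("/home/pi/webcam")  # same directory as in the fswebcam line above


def photo_path(now=None, photo_dir=PHOTO_DIR):
    """Build a unique file name from a timestamp, like $DATE in the shell version."""
    moment = now if now is not None else time.localtime()
    return photo_dir / (time.strftime("%Y-%m-%d_%H%M%S", moment) + ".jpg")


def fswebcam_command(path, resolution="1280x720"):
    """Return the fswebcam invocation from the article as an argument list."""
    return ["fswebcam", "-r", resolution, "--no-banner", str(path)]


def poll_once(sqs, s3, queue_url, bucket, run=subprocess.run):
    """Long-poll the queue once; for each message take a photo and upload it to S3."""
    reply = sqs.receive_message(
        QueueUrl=queue_url, MaxNumberOfMessages=1, WaitTimeSeconds=20
    )
    uploaded = []
    for message in reply.get("Messages", []):
        path = photo_path()
        run(fswebcam_command(path), check=True)
        s3.upload_file(str(path), bucket, path.name)
        # Delete the message only after the upload succeeded.
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
        uploaded.append(path.name)
    return uploaded


def main(queue_url, bucket):
    """Run forever on the Pi; queue_url and bucket are your own resources."""
    import boto3  # imported here so the helpers above work without AWS access

    sqs, s3 = boto3.client("sqs"), boto3.client("s3")
    while True:
        poll_once(sqs, s3, queue_url, bucket)
```

Long polling (`WaitTimeSeconds=20`) keeps the loop from hammering SQS with empty requests, and deleting the message last means a failed capture will be retried.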

- A photo object lands in S3 and automatically starts a dedicated Lambda

- In Lambda, we fetch the image and forward it to the Amazon Rekognition service, which returns the identified objects, from which we retrieve faces and recognized moods. The script calling Rekognition was written in Python 2.7.
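A minimal sketch of such a Lambda is shown below. It is written for Python 3 and boto3, so it should be read as an illustration and not as the Python 2.7 script from the talk. I am assuming here that the `DetectFaces` operation is used (the article does not say which Rekognition call was made) and that the function is triggered by a standard S3 object-created notification.

```python
from urllib.parse import unquote_plus


def s3_object_from_event(event):
    """Extract (bucket, key) of the uploaded photo from an S3 notification event."""
    record = event["Records"][0]["s3"]
    # Object keys arrive URL-encoded in S3 event notifications.
    return record["bucket"]["name"], unquote_plus(record["object"]["key"])


def detect_faces(bucket, key, client=None):
    """Ask Rekognition for face details, including emotions, for an image in S3."""
    if client is None:
        import boto3  # imported lazily so the helpers can be tried without AWS access

        client = boto3.client("rekognition")
    response = client.detect_faces(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        Attributes=["ALL"],  # the default attribute set does not include emotions
    )
    return response["FaceDetails"]


def lambda_handler(event, context):
    """Entry point for the S3 trigger: look up the photo and analyze its faces."""
    bucket, key = s3_object_from_event(event)
    faces = detect_faces(bucket, key)
    return {"photo": key, "faces": len(faces)}
```

Passing the image as an `S3Object` reference means the Lambda never has to download the photo itself; Rekognition reads it straight from the bucket, provided the function's role is allowed to do so.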

Next, we build a list with the emotions of the people in the photo as estimated by Rekognition (the service returns the extracted data along with a confidence level for each identification).
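As an illustration, assuming the response has the shape of the `FaceDetails` list returned by Rekognition's `DetectFaces` (each face carrying an `Emotions` list of `Type` and `Confidence` pairs), building that list could look like the sketch below. The 50% threshold and the sample data are invented for the example.

```python
def dominant_emotions(face_details, min_confidence=50.0):
    """Return one (emotion, confidence) pair per face, keeping the top-scoring emotion."""
    moods = []
    for face in face_details:
        emotions = face.get("Emotions", [])
        if not emotions:
            continue
        best = max(emotions, key=lambda e: e["Confidence"])
        # Skip faces where even the best guess is too uncertain (threshold is arbitrary).
        if best["Confidence"] >= min_confidence:
            moods.append((best["Type"], round(best["Confidence"], 1)))
    return moods


# Invented sample data in the shape of a DetectFaces response.
sample_faces = [
    {"Emotions": [{"Type": "HAPPY", "Confidence": 97.31}, {"Type": "CALM", "Confidence": 1.9}]},
    {"Emotions": [{"Type": "SURPRISED", "Confidence": 41.0}, {"Type": "SAD", "Confidence": 38.2}]},
    {"Emotions": [{"Type": "CALM", "Confidence": 88.04}]},
]
print(dominant_emotions(sample_faces))  # [('HAPPY', 97.3), ('CALM', 88.0)]
```

The second face is dropped because none of its emotions reaches the threshold, which keeps doubtful guesses out of the published result.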

With such a list in hand, all that remains is to build a readable entry from it, which we can then publish, for example on Twitter (in this article I skip registering a developer account and using the Twitter API, but that should not be a blocker for anybody 🙂).

We are ready to rumble!

4. Let’s see our creation in action!

The completed setup may look like this:

Now it is enough to call Alexa and ask: “Alexa, ask Check Audience Mood – are they pleased?”. After a while, a photo will appear on the pre-configured Twitter account, along with the audience mood analysis in text form:
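One possible way to turn a list of (emotion, confidence) pairs into a short, readable status is sketched below. The wording of the message and the 280-character limit are my assumptions; posting the text through the Twitter API is left out, just as in the rest of this article.

```python
from collections import Counter

STATUS_LIMIT = 280  # assumed maximum length of a single post


def mood_summary(moods, limit=STATUS_LIMIT):
    """Turn [(emotion, confidence), ...] into a short, readable status line."""
    if not moods:
        return "No faces with a clear mood were found in the photo."
    counts = Counter(emotion for emotion, _ in moods)
    parts = [f"{n} x {emotion.lower()}" for emotion, n in counts.most_common()]
    text = f"Audience mood, {len(moods)} face(s): " + ", ".join(parts)
    # Truncate defensively so the text always fits in one post.
    return text if len(text) <= limit else text[: limit - 3] + "..."


print(mood_summary([("HAPPY", 97.3), ("CALM", 88.0), ("HAPPY", 76.5)]))
# Audience mood, 3 face(s): 2 x happy, 1 x calm
```

Counting emotions instead of listing every face keeps the message short even for a full lecture hall.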

To sum up: analyzing photos or publishing them on Twitter is not rocket science (or brain surgery, if you know what I mean). However, implementing this on the AWS stack has two basic benefits:

- Time: building this whole PoC took no more than two relaxed evenings. You do not need to know anything in particular about neural networks (deep learning) to build quite interesting projects in a short time (I recommend looking around for the inspiring projects published by numerous enthusiasts!)

- Scale: Amazon gives us a big advantage in terms of customer reach. Thanks to the sheer number of technology partners, and hence the multitude of devices on which our solutions can be deployed (smart homes, cars, support for people with disabilities and many other areas), the prospect of potential opportunities and benefits is pleasantly dizzying.

I encourage you to experiment yourself and wish you an inspiring adventure with Amazon tools. Create great things!